Sakai Admin Guide - Load Balancing and Scaling

Load Balancing and Scaling

Introduction

Although Sakai can be run on a single server that houses all database and filesystem content, many sites will want to grow their installation beyond the confines of a single server. This section of the admin guide outlines the basic approaches to scaling up your Sakai installation; consider the information below when deciding which approach is best for you. Whichever solution you choose should be tested for performance and tuned as needed. For more information on tuning, see the Sakai Admin Guide - JVM Tuning and Sakai Admin Guide - Database Configuration and Tuning sections of the admin guide.

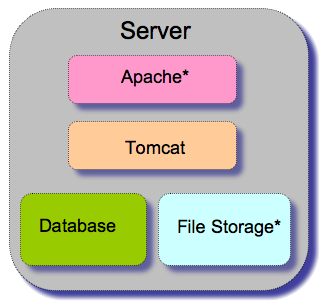

Standalone Server

The simplest option is to run all components of Sakai on a single server. As indicated by the asterisks in the diagram, Apache and filesystem storage are optional components: Sakai can run without Apache (with Tomcat handling requests directly) and can be configured to store binary content in the database.

This configuration entails installing a database server locally or using the default Hypersonic SQL (HSQLDB) database bundled with Sakai. It offers the fewest benefits in terms of scalability and redundancy.

However, this configuration is highly portable and can be set up on a reasonably powerful laptop. It is therefore well suited to a standalone development environment, in which a developer can write and test tools and modules for Sakai without even a network connection. It is also a good option for demonstrating Sakai in environments without reliable networking.

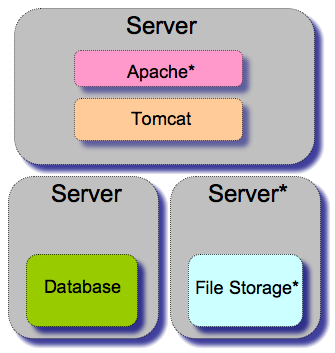

Thin client configuration

A "thin" client is simply a server that runs only the application component of Sakai and houses no database or filesystem content. This solution allows institutions with existing database and distributed storage resources to take advantage of that infrastructure. This configuration may be acceptable for a limited demonstration or pilot, but a load balanced solution is recommended for anything beyond that (see below).

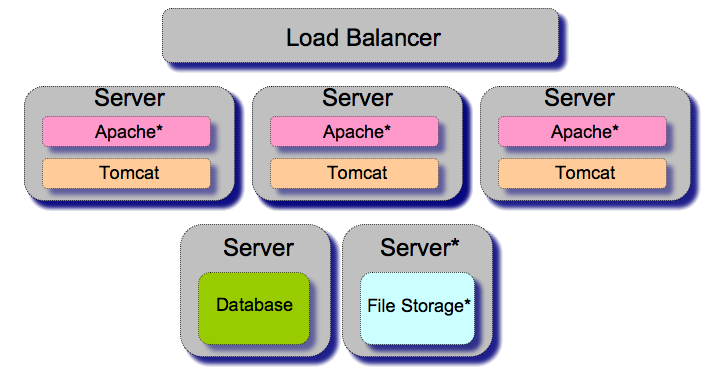

Load balanced thin client

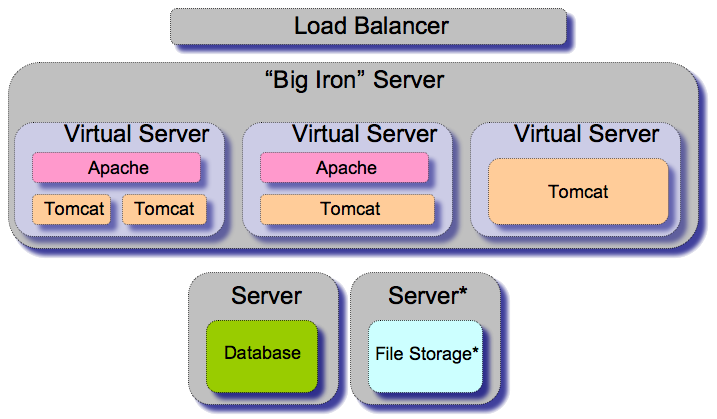

A load balanced collection of thin clients is one of the scalable options for Sakai. The load balancer distributes large volumes of traffic across the application servers, and the redundancy makes hardware failures and preventative maintenance easier to manage, since one node can be taken out of service while the others continue to serve users. In this configuration, each application server is pictured running only Tomcat, which is the minimum required to run Sakai.
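To illustrate how a load balancer combines traffic distribution with redundancy, here is a minimal Python sketch of round-robin balancing that skips nodes marked as down. The `RoundRobinBalancer` class and the node names are hypothetical; real balancers add health checks, weighting, and session stickiness on top of this basic idea.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical round-robin balancer that skips nodes marked as down."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.down = set()
        self._ring = cycle(self.nodes)

    def mark_down(self, node):
        # e.g. a node taken out of service for a hardware failure or maintenance
        self.down.add(node)

    def mark_up(self, node):
        self.down.discard(node)

    def next_node(self):
        # Look at each node at most once per call so we never loop forever.
        for _ in range(len(self.nodes)):
            node = next(self._ring)
            if node not in self.down:
                return node
        raise RuntimeError("no application servers available")


lb = RoundRobinBalancer(["app1", "app2", "app3"])
lb.mark_down("app2")  # app2 out for preventative maintenance
print([lb.next_node() for _ in range(4)])  # ['app1', 'app3', 'app1', 'app3']
```

While app2 is down, its share of traffic simply flows to the remaining nodes; calling `mark_up("app2")` returns it to the rotation.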

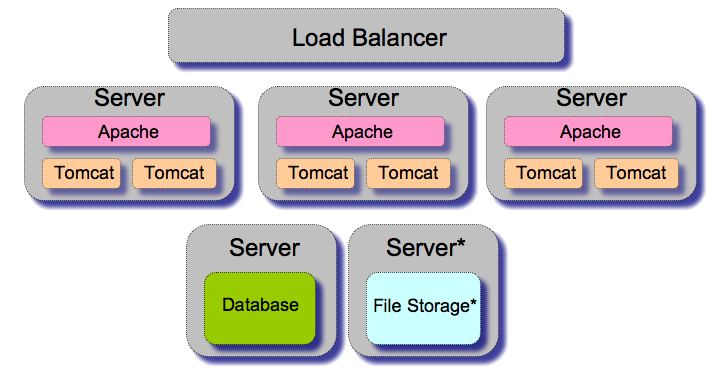

Thicker client

A "thicker" client is an application server that runs one or more Tomcat instances behind an Apache instance. Adding Apache lets you take advantage of its configurability and maturity, and also lets you set up clients that contain more than one Tomcat instance. A key reason to consider this configuration is the hard memory limit on 32-bit hardware: a 32-bit JVM can only address around 2 GB of RAM, which is tight for a full Sakai installation. By installing multiple Tomcat instances on a single application server, you can make better use of the memory available.
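As a rough planning aid, the following Python sketch estimates how many Tomcat instances fit on one application server when each 32-bit JVM is capped at about 2 GB of heap. The per-JVM overhead (0.5 GB), the memory reserved for the operating system and Apache (2 GB), and the server sizes in the example are illustrative assumptions, not measured Sakai figures; substitute numbers from your own monitoring.

```python
def tomcat_instances(total_ram_gb, heap_gb=2.0, jvm_overhead_gb=0.5, os_reserve_gb=2.0):
    """Estimate how many Tomcat JVMs fit on one application server.

    Defaults are illustrative assumptions, not measured Sakai figures:
    heap_gb          ~2 GB addressable heap for a 32-bit JVM
    jvm_overhead_gb  non-heap memory per JVM (thread stacks, etc.)
    os_reserve_gb    memory kept back for the OS and Apache
    """
    usable = total_ram_gb - os_reserve_gb
    if usable <= 0:
        return 0
    # Whole instances only: a partial JVM is no use.
    return int(usable // (heap_gb + jvm_overhead_gb))


print(tomcat_instances(8))   # 2, i.e. (8 - 2) // 2.5
print(tomcat_instances(16))  # 5, i.e. (16 - 2) // 2.5
```

Each additional Tomcat on the same host also needs its own HTTP, AJP, and shutdown ports so the instances do not collide.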

Big Iron

A number of sites have chosen to run a smaller number of more powerful servers (as few as one), divided into a series of virtual machines using solutions such as VMware. Although this may create a single point of failure for sites with only one large server, it offers the ability to allocate CPU and RAM dynamically among multiple virtual machines. It also has great advantages for change management, as a single node can be updated, tested, and then cloned into the appropriate number of updated application servers. In addition, the state of a node can be saved so that major changes can be rolled back if problems are detected later on.

Load Balancing Solutions

Sites running Sakai in production have chosen to handle load balancing in many different ways. These can roughly be divided into software solutions, which run on existing hardware, and hardware solutions, which run software on dedicated hardware, typically provided by a commercial vendor.

Software Solutions

The simplest software solution is to use an instance of Apache to balance traffic between multiple Tomcat installations using mod_jk (Apache 1.3 and 2.0) or mod_proxy_ajp (Apache 2.2 and higher). Apache 2.2 and higher also offers mod_proxy_balancer, which can balance traffic across multiple Apache or Tomcat installations. For more details on setting up Tomcat with mod_proxy_ajp, see the Sakai Admin Guide - Advanced Tomcat (and Apache) Configuration section of the admin guide.
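Because a mod_proxy_balancer configuration follows a regular pattern, it can be generated from a list of nodes. The Python sketch below renders a `<Proxy balancer://...>` block with one AJP `BalancerMember` per Tomcat node. It assumes Apache 2.2 or later with mod_proxy, mod_proxy_ajp, and mod_proxy_balancer loaded, Tomcat's AJP connector on the default port 8009, and each Tomcat's `jvmRoute` (set on its `Engine` element in server.xml) matching the `route` value; the hostnames, the `balancer_config` function, and the balancer name `sakai` are hypothetical.

```python
def balancer_config(nodes, name="sakai", ajp_port=8009):
    """Render an Apache 2.2+ mod_proxy_balancer block for a set of Tomcat nodes.

    `nodes` maps each Tomcat's jvmRoute to its hostname (hypothetical values).
    """
    lines = [f"<Proxy balancer://{name}>"]
    for route, host in nodes.items():
        # One AJP member per Tomcat; route must equal that Tomcat's jvmRoute.
        lines.append(f"    BalancerMember ajp://{host}:{ajp_port} route={route}")
    lines.append("</Proxy>")
    # Tomcat appends ".<jvmRoute>" to the JSESSIONID value, which Apache uses
    # to send each request back to the member that created the session.
    lines.append(f"ProxyPass / balancer://{name}/ stickysession=JSESSIONID")
    return "\n".join(lines)


print(balancer_config({"app1": "app1.example.edu", "app2": "app2.example.edu"}))
```

Generating the block from one node list keeps the `route` values and the Tomcat `jvmRoute` settings from drifting apart as nodes are added or removed.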

Hardware Solutions

There are a number of dedicated solutions provided by commercial vendors such as F5 Networks and Zeus. Some solutions can load balance only HTTP traffic (comparable to proxying HTTP with mod_proxy_balancer), while others can also balance AJP traffic (comparable to mod_jk or mod_proxy_ajp). Any solution that provides compatible sticky sessions (see below) should be capable of working with Sakai.

Sticky Sessions and Sakai

Whatever load balancing solution you choose, you must ensure that each client remains on the same application server for the life of its session. There are several approaches to establishing and maintaining sticky sessions. Solutions that base stickiness on the client's IP address have problems with virtual private networks and proxies, because many users may share one address, or one user's address may change mid-session. Solutions that use a dedicated cookie seem to work best for most sites, but may cause problems with web services calls and some DAV clients. Whichever method you choose, be aware that the sticky session timeout is one of the factors controlling how long idle sessions remain active: once a client's sticky session times out, it may be sent to a different node, and the user will then be required to log in again.
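The interaction between cookie-based stickiness and the sticky session timeout can be modelled in a few lines. In this minimal Python sketch (the `StickyRouter` class, the node names, and the 1800-second timeout are all hypothetical), the balancer remembers which node each client cookie was assigned to and when it was last seen; once an entry has been idle longer than the timeout, the client is reassigned round-robin and may land on a node that does not hold its Sakai session.

```python
import itertools


class StickyRouter:
    """Hypothetical balancer that pins each client cookie to one app server."""

    def __init__(self, nodes, sticky_timeout_s):
        self._ring = itertools.cycle(nodes)
        self.sticky_timeout_s = sticky_timeout_s
        self._table = {}  # cookie value -> (node, time of last request)

    def route(self, cookie, now):
        entry = self._table.get(cookie)
        if entry is not None and now - entry[1] <= self.sticky_timeout_s:
            node = entry[0]          # still sticky: same node as before
        else:
            node = next(self._ring)  # new or expired: may land on another node
        self._table[cookie] = (node, now)
        return node


r = StickyRouter(["app1", "app2", "app3"], sticky_timeout_s=1800)
print(r.route("alice", now=0))     # app1
print(r.route("bob", now=10))      # app2
print(r.route("alice", now=600))   # app1 (still within the timeout)
print(r.route("alice", now=3000))  # app3 (idle 2400 s > 1800 s, reassigned)
```

In the example, alice's session lives on app1, so after being moved to app3 she would have to log in again. Keeping the sticky timeout at least as long as the application session timeout avoids this particular failure mode.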

Cluster Options in the sakai.properties File

Key portions of Sakai are designed to be cluster-aware. There are a handful of settings in the sakai.properties file that control the way in which clustering is managed.

| Property | Description |
|---|---|
| expired@org.sakaiproject.cluster.api.ClusterService | The time in seconds after which an inactive server will be expired from the cluster. |
| ghostingPercent@org.sakaiproject.cluster.api.ClusterService | The percentage of maintenance passes to run the full de-ghosting / cleanup activities, including delisting stale server nodes. |
| refresh@org.sakaiproject.cluster.api.ClusterService | How often (in seconds) a server should register that it is still alive. |
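To show how the three settings relate, here is a simplified Python model: each live server records a heartbeat roughly every `refresh` seconds, and a maintenance pass delists any server that has been silent for longer than `expired` seconds, but only on the share of passes selected by `ghostingPercent`. The `ClusterRegistry` class, the random selection of full passes, and the 600-second expiry in the example are assumptions of this sketch; it does not reproduce Sakai's ClusterService implementation. One consequence of the model is that `expired` should be several times `refresh`, so a single delayed check-in does not get a live server delisted.

```python
import random


class ClusterRegistry:
    """Simplified model of the refresh / expired / ghostingPercent settings.

    Hypothetical illustration only; this is not Sakai's ClusterService code.
    """

    def __init__(self, expired_s, ghosting_percent, rng=None):
        self.expired_s = expired_s                # plays the role of expired@...
        self.ghosting_percent = ghosting_percent  # plays the role of ghostingPercent@...
        self.rng = rng or random.Random()
        self.last_heartbeat = {}                  # server id -> time of last check-in

    def heartbeat(self, server_id, now):
        # Each live node checks in roughly every `refresh` seconds.
        self.last_heartbeat[server_id] = now

    def maintenance_pass(self, now):
        """Run one maintenance pass; return the servers delisted on this pass."""
        # Assumption: full cleanup runs on a random ghosting_percent % of passes.
        if self.rng.random() * 100 >= self.ghosting_percent:
            return []
        stale = [s for s, t in self.last_heartbeat.items() if now - t > self.expired_s]
        for server_id in stale:
            del self.last_heartbeat[server_id]
        return stale


reg = ClusterRegistry(expired_s=600, ghosting_percent=100)
reg.heartbeat("app1", now=0)
reg.heartbeat("app2", now=0)
reg.heartbeat("app1", now=900)         # app2 has stopped checking in
print(reg.maintenance_pass(now=900))   # ['app2']
```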

Clustering and auto.ddl

Problems have been reported with installations that enable the auto.ddl property on multiple nodes in a cluster. Some suggest that auto.ddl should only be used for the initial installation, and that updates should be managed using the migration scripts included with each release. Others allow a single node to start up with auto.ddl enabled, letting that node create new tables, update existing tables, and create indexes, while the remaining nodes run with auto.ddl disabled.
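For sites that take the single-node approach, the per-node setting can be derived from one choice of DDL node. The Python sketch below (the `rollout_plan` function and the node names are hypothetical) returns a start order in which the chosen node starts first with `auto.ddl=true` and every other node follows with `auto.ddl=false`. Starting the remaining nodes only after the first has finished starting is an assumption of this sketch, and how each line reaches a node's sakai.properties is left to your deployment tooling.

```python
def rollout_plan(nodes, ddl_node):
    """Hypothetical start order: the DDL node first with auto.ddl=true, the rest false."""
    if ddl_node not in nodes:
        raise ValueError(f"{ddl_node!r} is not one of the cluster nodes")
    order = [ddl_node] + [n for n in nodes if n != ddl_node]
    return [(n, f"auto.ddl={'true' if n == ddl_node else 'false'}") for n in order]


for node, setting in rollout_plan(["app1", "app2", "app3"], ddl_node="app2"):
    print(node, setting)
# app2 auto.ddl=true
# app1 auto.ddl=false
# app3 auto.ddl=false
```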

Performance Testing

When testing and configuring for performance, you will be focusing on modifying one or more of the following:

- your Tomcat JVM settings

- your Tomcat configuration

- your Apache configuration

- your database configuration

- your operating system settings

- your load balancer settings

- the number of application servers allocated

Before moving into production, the basic methodology is to simulate an appropriate amount of load, monitor performance, and tune components accordingly. Once you are in production, the basic methodology is to monitor performance and make incremental adjustments as needed.
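One simple way to judge a tuning change is to run the same simulated load before and after the change and compare a high percentile of response times, since an average can hide a tail of slow requests. The Python sketch below uses the nearest-rank percentile method; the function names are hypothetical, and the sample latencies are made-up numbers for illustration, not measurements from a Sakai installation. In practice the samples would come from your load tool's results.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile of `samples` for 0 < p <= 100."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def compare_runs(baseline_ms, tuned_ms, p=95):
    """Return (before, after, change) for the p-th percentile response time."""
    before = percentile(baseline_ms, p)
    after = percentile(tuned_ms, p)
    return before, after, after - before


# Made-up response times (ms) from two runs of the same simulated load.
print(compare_runs([120, 130, 150, 200, 900], [110, 115, 140, 160, 300]))
# (900, 300, -600)
```

Changing one component at a time between runs makes it easier to attribute a difference to a specific setting.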

There are a handful of commercial and free tools available for generating load. Of the commercial tools available, the most commonly used in the Sakai community are WebLoad and LoadRunner. Of the free tools available, the most commonly used is JMeter. For more information on using JMeter, please visit: